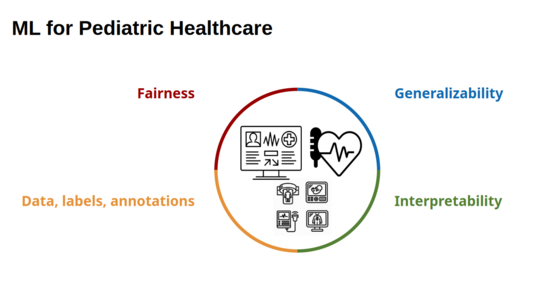

Areas of Interest

Fairness

Machine learning models can unintentionally reproduce or amplify biases present in healthcare data. This can lead to unequal performance across patient groups defined by age, sex, ethnicity, or socioeconomic status. We aim to design methods that identify, measure, and mitigate bias so that AI tools benefit all patients equitably.

Generalizability

Models trained on limited or homogeneous datasets often fail when applied to new hospitals, devices, or patient populations. We investigate strategies such as domain adaptation, multi-site training, and robust modeling approaches to build systems that transfer well across diverse clinical settings. Generalizable models are essential for safe and reliable deployment in real-world medicine.

Data and Annotation

Medical data are often scarce, costly to annotate, and of variable quality. We explore approaches such as semi-supervised learning, weak supervision, active learning, and efficient annotation strategies to make the most of the data available. Addressing these challenges is crucial for building models that learn effectively from limited pediatric and clinical datasets.

Interpretability

For clinical adoption, models must be not only accurate but also understandable. We study methods that make AI predictions transparent and actionable, allowing physicians to validate a model's output, judge when to trust it, and intervene in the decision-making process. Interpretability bridges the gap between complex algorithms and clinicians' responsibility for patient care.