WDM: 3D Wavelet Diffusion Models for High-Resolution Medical Image Synthesis

Due to the three-dimensional nature of CT and MR scans, generative modelling of medical images is particularly challenging. We propose WDM, a wavelet-based medical image synthesis framework that applies a diffusion model to wavelet-decomposed images. [1]

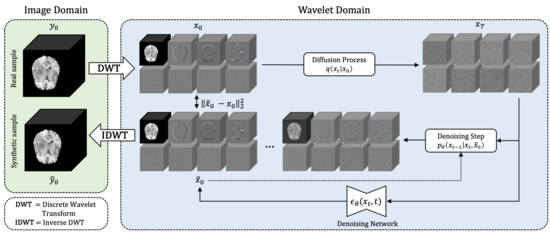

Our proposed approach (Fig. 1) follows a concept that is closely related to Latent Diffusion Models but replaces the first-stage autoencoder with the discrete wavelet transform, a dataset-agnostic, training-free approach for spatial dimensionality reduction.
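To illustrate how a discrete wavelet transform can act as a training-free spatial reduction step, the following is a minimal NumPy sketch of a single-level 3D transform and its inverse. It assumes a Haar wavelet, which the text above does not specify, and the function names `haar_dwt3d` and `haar_idwt3d` are hypothetical, chosen for this example only. It is not taken from the WDM code base.

```python
import numpy as np


def haar_dwt3d(volume):
    """Single-level 3D Haar DWT (assumed wavelet): (D, H, W) -> (8, D/2, H/2, W/2)."""
    if volume.ndim != 3 or any(s % 2 for s in volume.shape):
        raise ValueError("expected a 3D volume with even side lengths")
    bands = [volume.astype(np.float64)]
    # Filter along each axis in turn; every pass doubles the number of
    # sub-bands and halves the extent along that axis.
    for axis in range(3):
        split = []
        for b in bands:
            even = np.take(b, np.arange(0, b.shape[axis], 2), axis=axis)
            odd = np.take(b, np.arange(1, b.shape[axis], 2), axis=axis)
            split.append((even + odd) / np.sqrt(2.0))  # low-pass (average)
            split.append((even - odd) / np.sqrt(2.0))  # high-pass (detail)
        bands = split
    # Stack the 8 sub-bands as channels.
    return np.stack(bands, axis=0)


def haar_idwt3d(coeffs):
    """Inverse of haar_dwt3d: (8, d, h, w) -> (2d, 2h, 2w)."""
    bands = list(coeffs)
    # Undo the axes in reverse order; adjacent bands form (low, high) pairs.
    for axis in (2, 1, 0):
        merged = []
        for low, high in zip(bands[0::2], bands[1::2]):
            shape = list(low.shape)
            shape[axis] *= 2
            out = np.empty(shape, dtype=low.dtype)
            even_idx = [slice(None)] * 3
            odd_idx = [slice(None)] * 3
            even_idx[axis] = slice(0, None, 2)
            odd_idx[axis] = slice(1, None, 2)
            out[tuple(even_idx)] = (low + high) / np.sqrt(2.0)
            out[tuple(odd_idx)] = (low - high) / np.sqrt(2.0)
            merged.append(out)
        bands = merged
    return bands[0]
```

Under these assumptions, a 256 × 256 × 256 volume becomes an 8-channel array of 128 × 128 × 128 coefficients. The total number of values is unchanged and the transform is exactly invertible, but each spatial axis is halved, so a network operating on the coefficients sees a smaller spatial grid without any learned first-stage autoencoder.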

Experiments on unconditional image generation tasks demonstrated state-of-the-art image fidelity (FID) and sample diversity (MS-SSIM) scores compared to recent GANs, Diffusion Models, and Latent Diffusion Models. The proposed method is capable of producing high-resolution images (256 × 256 × 256) on standard hardware (a single 40GB GPU).

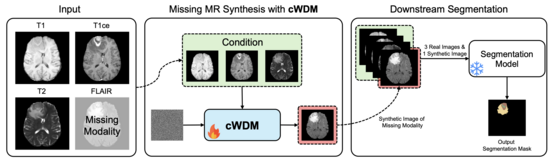

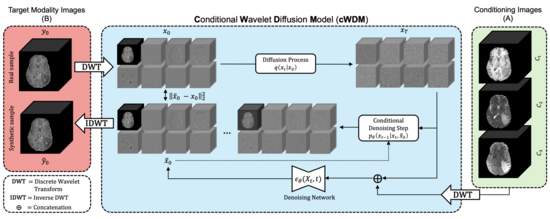

We additionally present a conditional WDM [2] for solving image-to-image translation tasks on high-resolution volumes (Fig. 3). We apply our model to a missing modality synthesis task (Fig. 2), which allows pre-trained brain tumor segmentation models to be applied even if required image modalities are missing.

More information and relevant links can be found on the project page: https://pfriedri.github.io/wdm-3d-io/

[1] Friedrich, Paul, et al. "WDM: 3D Wavelet Diffusion Models for High-Resolution Medical Image Synthesis." MICCAI Workshop on Deep Generative Models. Cham: Springer Nature Switzerland, 2024.

[2] Friedrich, Paul, et al. "cWDM: Conditional Wavelet Diffusion Models for Cross-Modality 3D Medical Image Synthesis." arXiv preprint arXiv:2411.17203, 2024.