Intuitive Surgical User Interface

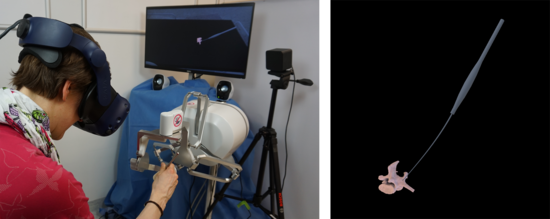

Surgical robots are often controlled by the surgeon through a teleoperation interface. However, to date there are only limited guidelines on how to design such an interface so that it is intuitive for the user. This project therefore investigates how to create an intuitive user interface that allows surgeons to easily plan, simulate, and perform the surgical tasks at hand.

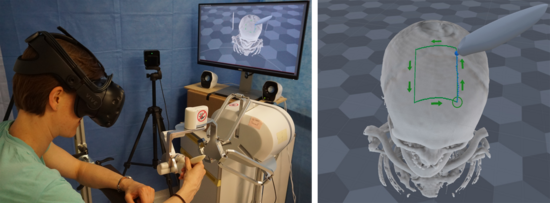

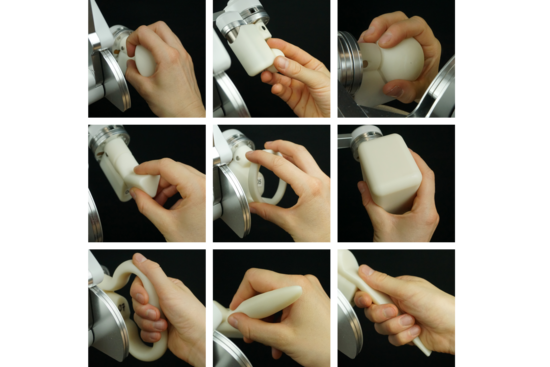

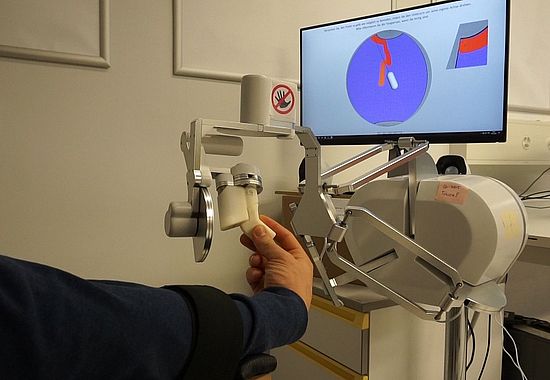

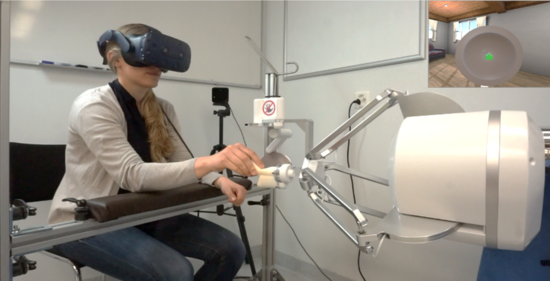

The project focuses on two aspects of the user interface: the telemanipulator handle, and haptic feedback, both for simulating the intervention and for guidance during it. Because it is not yet known how the handle of a telemanipulator influences teleoperation performance, we develop, evaluate, and compare different handles. This will allow us to choose the most intuitive handle for our application. In addition, we are working on haptically simulating medical data sets (e.g. CT data) to add a new modality to current virtual reality planning and simulation systems.

The project's work on novel possibilities for simulation has been on hold since August 2020. Efforts to acquire funding for further work are ongoing. As soon as funding is secured, this promising project will be resumed.

For more information, feel free to contact the project leader Dr. Nicolas Gerig.

E. I. Zoller, N. Gerig, P. C. Cattin, G. Rauter, “The Functional Rotational Workspace of a Human-Robot System can be Influenced by Adjusting the Telemanipulator Handle Orientation”, in IEEE Transactions on Haptics, 2020.

E. I. Zoller, B. Faludi, N. Gerig, G. F. Jost, P. C. Cattin, G. Rauter, “Force quantification and simulation of pedicle screw tract palpation using direct visuo-haptic volume rendering”, International Journal of Computer Assisted Radiology and Surgery, 2020.

B. Faludi, E. I. Zoller, N. Gerig, A. Zam, G. Rauter, P. C. Cattin, “Direct Visual and Haptic Volume Rendering of Medical Data Sets for an Immersive Exploration in Virtual Reality,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2019), LNCS 11768, pp. 29-37, 2019.

E. I. Zoller, P. C. Cattin, A. Zam, G. Rauter, “Assessment of the Functional Rotational Workspace of Different Grasp Type Handles for the lambda.6 Haptic Device,” 2019 IEEE World Haptics Conference (WHC), pp. 127-132, 2019.

E. I. Zoller, P. Salz, P. C. Cattin, A. Zam, G. Rauter, “Development of Different Grasp Type Handles for a Haptic Telemanipulator,” in Proceedings of the 9th Joint Workshop on New Technologies for Computer/Robot Assisted Surgery (CRAS 2019), pp. 22-23, 2019.

M. Eugster, E. I. Zoller, L. Fasel, P. Cattin, N. F. Friederich, A. Zam, G. Rauter, “Contact force estimation for minimally invasive robot-assisted Laserosteotomy in the human knee,” in Joint Workshop on New Technologies for Computer/Robot Assisted Surgery (CRAS), vol. 8, pp. 39-40, 2018.

E. I. Zoller, P. Salz, A. Zam, P. C. Cattin, G. Rauter, “Intuitive Haptic Telemanipulator for Laser Osteotomy with a Robotic Endoscope,” in Hand, Brain and Technology, p. 91, 2018.

E. I. Zoller, G. Aiello, A. Zam, P. C. Cattin, G. Rauter, “Is 3-DoF position feedback sufficient for exact positioning of a robotic endoscope end-effector in the human knee?,” 2017 IEEE World Haptics Conference (WHC), 2017.

Completed

- Semester Thesis: Development of a parameter identification recipe for 3-DoF haptic guidance (PDF, 39.64 KB)

- Master Thesis: Intuitive haptic telemanipulator for a robotic endoscope (PDF, 615.43 KB)

- Master Thesis: Enhancement of telemanipulation: A survey on haptic feedback and motion scaling for a robotic endoscope

- Master Thesis: Interactive approach path planning system for insertion of a robotic endoscope in the human knee (PDF, 276.99 KB)

- Master Thesis: Assessment of different grasp type handles for a teleoperated 6-DoF peg-in-hole task with the lambda.6 device

- Master Thesis: Augmenting a custom haptic input device handle with force feedback for intuitive grasping